From typing to prompting: The Australian Public Service learns to talk to machines

It used to be simple: type a request, wait for a reply. Now, Australian Public Service (APS) personnel are learning a new language, the language of prompts. Generative AI platforms like Copilot are not just tools; they’re collaborators. But getting useful, accurate, and nuanced responses depends on how you ask. And that’s where the art of prompting begins! Learn more from Parbery Manager Ben Thew.

As Generative AI (GenAI) platforms like Copilot make their way into APS offices, APS personnel are discovering both the promise and the peculiarities of prompting machines to do human work. GenAI assists with tasks like summarising documents and drafting correspondence. It works tirelessly, though not flawlessly, and hasn’t quite replaced Goose from Top Gun just yet!

GenAI learnings in the APS

A recent study by the UNSW Public Service Research Group, released in 2025, dives into how GenAI platforms are being adopted across Australian public service agencies. It explores the cautious optimism, the risks, and the emerging skillsets (like prompt engineering) that are reshaping how APS personnel interact with technology. The researchers found that:

- GenAI platforms are being cautiously adopted for Australian Government policy and documentation work, particularly for internal tasks such as summarising documents, generating drafts, and supporting coding.

- APS personnel see GenAI platforms as augmenting, not replacing, human capabilities, especially for repetitive tasks.

- External-facing applications raise trust concerns, especially where sensitive data is involved.

- Unauthorised use is common, with staff using personal devices to access more capable GenAI platforms.

- Risks include bias, inaccuracy, data sovereignty breaches, prohibitive costs, and environmental impact.

- Transformational change is limited by incremental pilot projects and a lack of strategic direction.

The study’s recommendations were to:

- Align GenAI strategies with public service values and governance arrangements, including environmental sustainability.

- Build confidence through transparent reporting, evaluation, and human oversight mechanisms.

- Re-examine workforce development to support GenAI-augmented policy craft, especially for junior staff and senior executives.

GenAI platforms can enhance tasks such as document summarisation, creation of policy briefs, and exploration of policy options if the right prompts are used. As such, prompt engineering is a foundational skill for APS personnel to use GenAI platforms effectively.

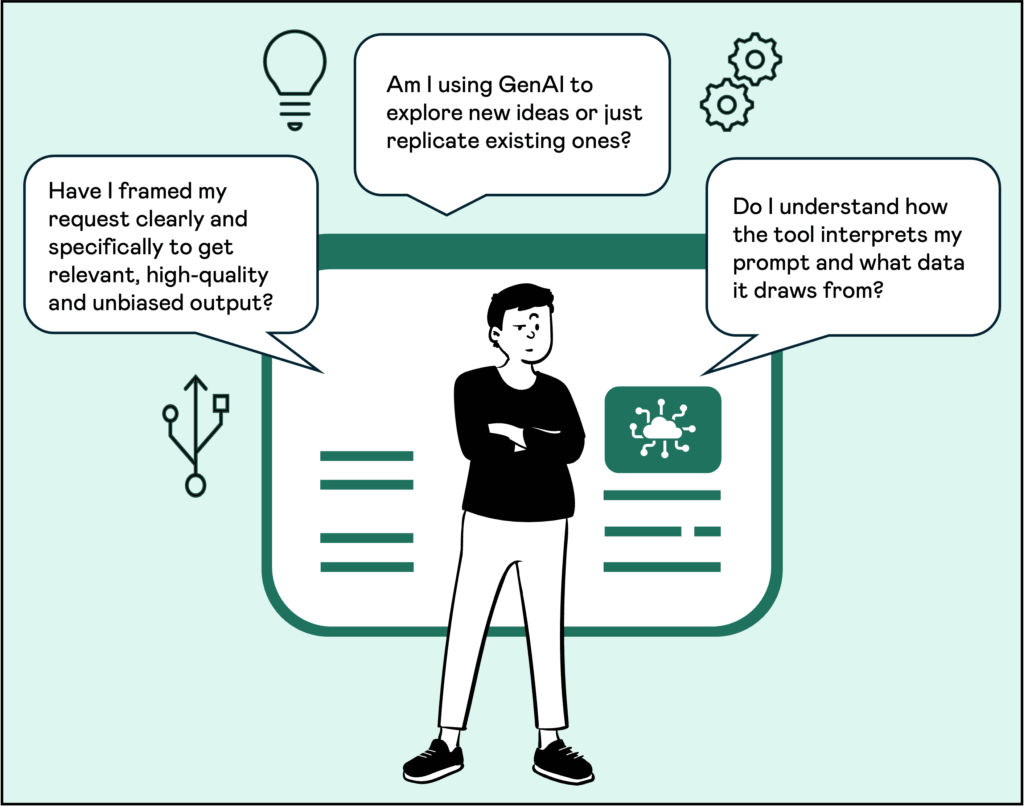

As part of this review, APS personnel identified three main concerns about the use of GenAI platforms, expressed as the questions below.

- Have I framed my request clearly and specifically to get relevant, high-quality and unbiased output?

- Am I using GenAI to explore new ideas or just replicate existing ones?

- Do I understand how the tool interprets my prompt and what data it draws from?

Why prompt engineering matters

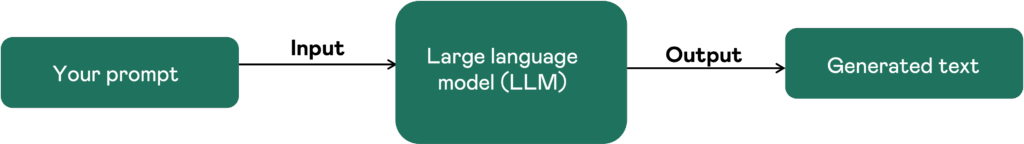

Prompt engineering, the process of formulating instructions to obtain a desired output from the Large Language Models (LLMs) behind GenAI platforms, is key to addressing these frequent questions.

The prompt is a natural language query describing the task for a GenAI platform. For text-to-text, text-to-image, or text-to-voice models, prompts can be queries, commands, or longer statements with context and instructions. Prompt engineering includes phrasing queries, specifying style, choosing words and grammar, adding context, or defining a character (persona) for the LLM to adopt.

For the APS, adopting prompt engineering is a strategic opportunity (not just a technical one) to enhance service delivery, policy development, and efficiency while upholding public trust and ethical compliance.

Understanding the power—and limits—of LLMs

Prompt engineering is essential to make full use of LLM capabilities. It is, however, an emerging field focused on creating and refining prompts that effectively support business and technical applications. LLMs are machine learning models designed to process prompts and generate human-like text. They are trained on large sets of language data, which can include data obtained from the internet.

Prompt engineering helps APS personnel work smarter with the LLM in GenAI platforms. It is about knowing how to ask the right questions, so the GenAI platform gives useful, accurate, and relevant answers whether you are drafting a briefing, summarising a report, or preparing a commercial response.

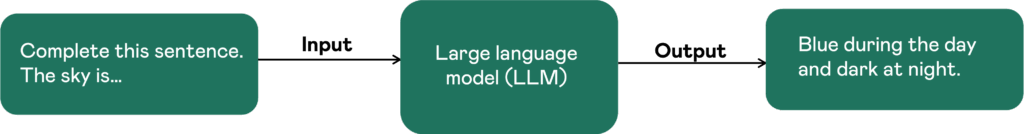

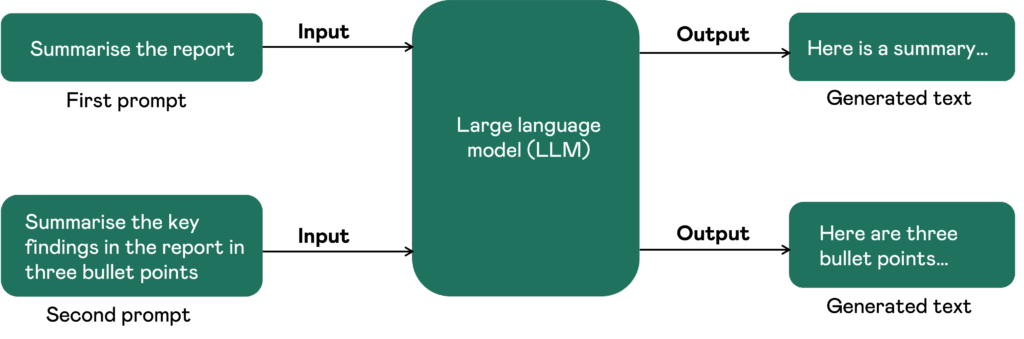

LLMs generate outputs (e.g. text) that align with the inputs (your prompts) they receive.

By learning prompt engineering, APS personnel can better understand what LLMs are good at (like pulling together information quickly) and where they need help (like interpreting complex policy nuance).

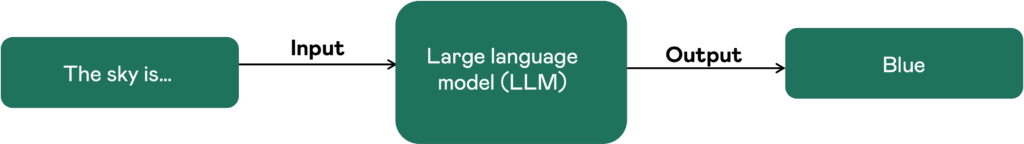

Consider a simple example (Bot Penguin, 2024): given the prompt ‘The sky is’, the LLM continues with words that fit the context, such as ‘blue’ or even ‘clear’. It is a simple illustration of how LLMs generate text based on patterns, but without more direction the output might be vague or unexpected. That is why prompt engineering matters: it helps guide the LLM to produce responses that are accurate, relevant, and fit-for-purpose.

Let’s try to improve it a bit by telling the LLM what to do: ‘Complete the sentence: The sky is’.

So what happened? This prompt instructs the LLM to complete the sentence, so the result looks a lot better: it follows exactly what you told it to do (‘complete the sentence’). This approach, designing effective prompts that instruct the LLM to perform a desired task, is referred to as prompt engineering.
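The ‘continue the pattern’ behaviour described above can be illustrated with a deliberately tiny sketch. This is not how an LLM works internally (real models use neural networks trained on vast amounts of text). It is a toy bigram model, and the corpus and function names are hypothetical. It counts which word follows which in a few made-up sentences, then extends a prompt with the most frequent next word.

```python
from collections import Counter, defaultdict
from typing import Dict

# A tiny, made-up corpus standing in for training data (hypothetical).
CORPUS = """
the sky is blue today .
the sky is blue and clear .
the sky is clear tonight .
the report is long .
"""


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    words = text.split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_prompt(prompt: str, model: Dict[str, Counter], max_words: int = 3) -> str:
    """Greedily append the most frequent next word until a full stop or the word limit."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # no pattern was seen after this word, so stop
        next_word = candidates.most_common(1)[0][0]  # ties go to the word seen first
        if next_word == ".":
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(continue_prompt("The sky is", model))  # prints "the sky is blue today"
```

The toy can only continue text; it has no way to act on an instruction such as ‘Complete the sentence’. Following instructions is something modern LLMs are specifically trained to do, which is why the wording of a prompt matters so much.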

Limitations without prompt engineering

Although LLMs are highly capable, they have inherent limitations in generating accurate and contextually relevant responses without effective prompt engineering. These models learn patterns from extensive training data but often struggle to fully interpret nuanced intentions and contextual cues. Without clear and detailed instructions in the prompt, LLMs may fail to understand the specific task or objective required.

Such challenges can lead to outputs that are factually inaccurate, irrelevant, or lacking in depth and specificity. Prompt engineering addresses these limitations by offering explicit guidance, which directs the response generation process and helps align the output with the desired objectives.

In the APS context, content generated by LLMs must be managed with care, especially when used to support public-facing services, policy development, or internal decision-making. If prompts are poorly designed or unintentionally biased, the GenAI platform may produce outputs that reinforce stereotypes, reflect discriminatory assumptions, or generate unethical content.

This is particularly concerning in APS settings, where trust, fairness, and accountability are paramount. APS personnel must be aware that LLMs learn from vast datasets that may contain historical biases and, without careful prompt engineering, these biases can surface in ways that undermine the integrity of public service delivery or policy advice. Ensuring prompts are clear, inclusive, and aligned with ethical standards is essential to prevent unintended harm and maintain public confidence. The Digital Transformation Agency (DTA) has recently provided interim guidance and standards for Australian Government agencies using GenAI (DTA & DISR, 2023).

In addition, APS personnel should not assume that either publicly available GenAI platforms (such as ChatGPT) or privately accessible platforms (such as Copilot) have completed security risk assessments or been approved for use with classified or sensitive data. They must not use these platforms to process, store, or generate content that involves protected information unless the platform has undergone formal assessment and received contractual approval.
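As one small, hedged illustration of a pre-submission safeguard, the sketch below flags text carrying Australian Government protective markings before it is pasted into a prompt. The marking list, the function names, and the case-sensitive matching are assumptions for illustration only. Keyword matching misses unmarked sensitive content and is no substitute for agency policy or the formal assessment described above.

```python
import re
from typing import List

# Illustrative list of protective markings used under the Protective Security Policy
# Framework (PSPF). Which markings should block a prompt is an assumption here;
# follow your agency's policy.
BLOCKED_MARKINGS = ["OFFICIAL: Sensitive", "PROTECTED", "SECRET", "TOP SECRET"]


def find_markings(text: str) -> List[str]:
    """Return each blocked marking that appears as a whole phrase (case-sensitive)."""
    found = []
    for marking in BLOCKED_MARKINGS:
        if re.search(r"\b" + re.escape(marking) + r"\b", text):
            found.append(marking)
    return found


def safe_to_submit(prompt: str) -> bool:
    """A prompt passes this (very limited) check only if no blocked marking is found."""
    return not find_markings(prompt)


print(find_markings("PROTECTED: draft Cabinet submission"))  # ['PROTECTED']
print(safe_to_submit("Summarise this published media release"))  # True
```

Matching is case-sensitive so that ordinary words such as ‘protected information’ in prose are not flagged; the trade-off is that a lower-case marking would slip through.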

A comprehensive guide to prompt engineering for APS personnel

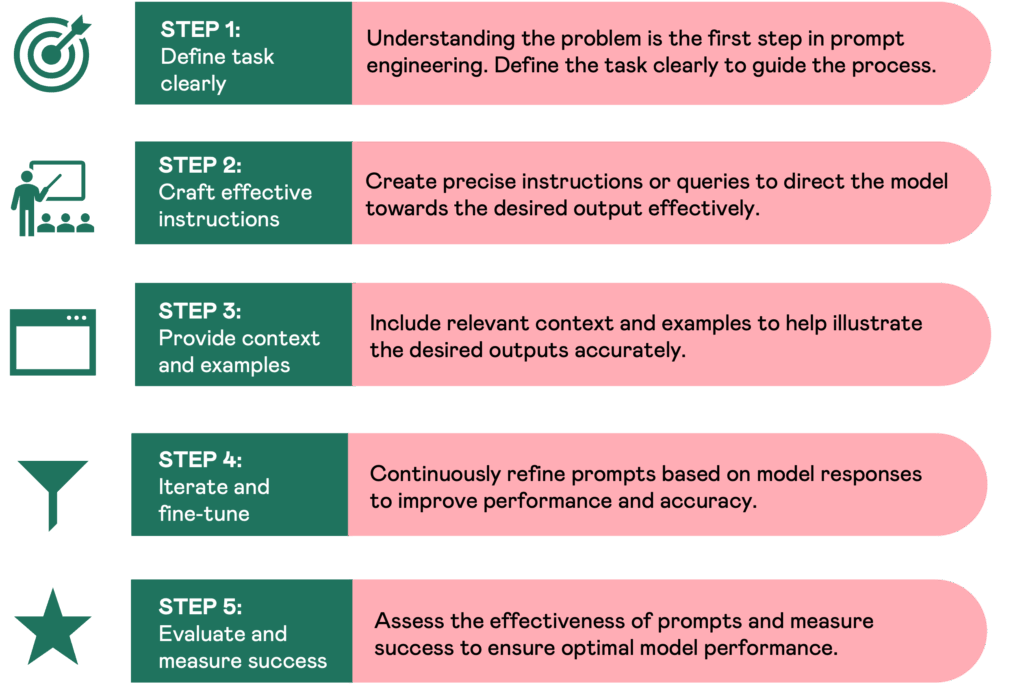

Following a step-by-step guide (Bot Penguin, 2024) helps APS personnel use GenAI platforms safely and effectively by ensuring prompts are well-structured, policy-aligned, and risk-aware. The process should follow these steps:

Step 1: Define your task clearly

The first step in the process is to identify your task.

Define the goal and shape the output

Start by deciding what you want the GenAI platform to do: write something, summarise, or answer a question? This helps you create a prompt that matches the task. Think about what the result should look like: a list, a paragraph, a casual reply? The clearer your goal, the better the LLM can respond. Make sure your objective is realistic and fits what the LLM is good at. GenAI platforms are good at generating text, voice, and images, but they are not reliable on facts or deep reasoning, so tailor your prompt accordingly.
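One way to make these Step 1 decisions explicit is to record them as structured fields and render them into a single instruction. The `TaskSpec` class below is a hypothetical sketch, not part of Copilot or any GenAI platform; it only builds the prompt text.

```python
from dataclasses import dataclass


@dataclass
class TaskSpec:
    """Hypothetical container for the Step 1 decisions: the action, its subject, and the output shape."""
    action: str          # e.g. "Summarise", "Draft", "Answer"
    subject: str         # what the action applies to
    output_format: str   # e.g. "five bullet points", "one paragraph"
    audience: str = ""   # optional: who will read the result

    def to_prompt(self) -> str:
        """Render the decisions as a single, explicit instruction."""
        prompt = f"{self.action} {self.subject} as {self.output_format}"
        if self.audience:
            prompt += f" for {self.audience}"
        return prompt + "."


spec = TaskSpec("Summarise", "the attached consultation report", "five bullet points", "a senior executive")
print(spec.to_prompt())
# Summarise the attached consultation report as five bullet points for a senior executive.
```

Writing the goal down as fields forces each decision (what, on what, in what shape, for whom) to be made before the prompt is sent.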

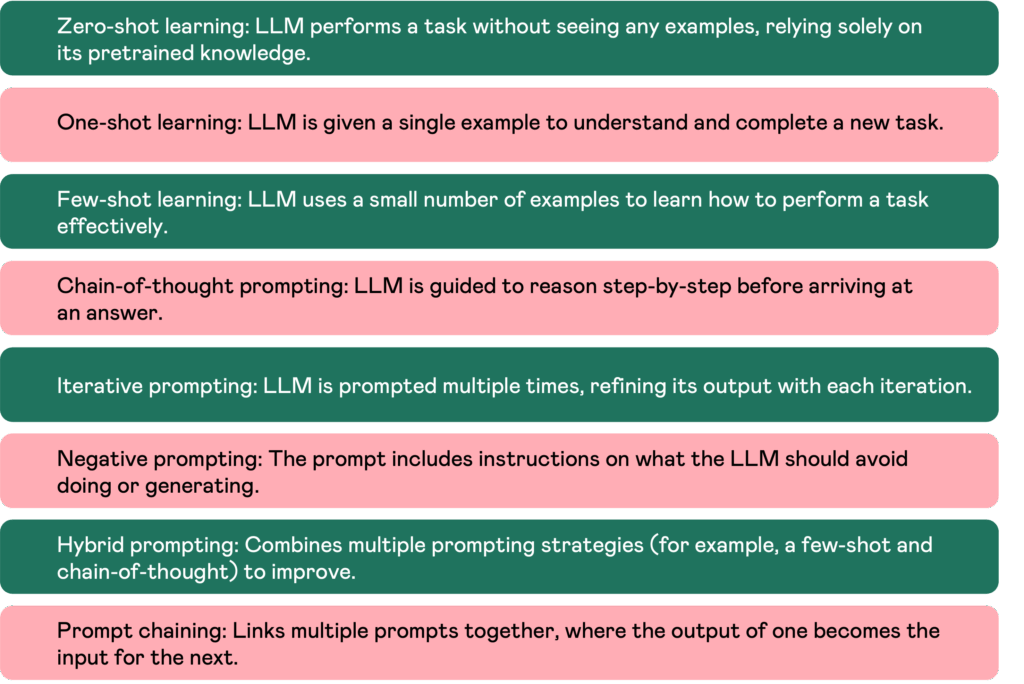

The image above summarises eight effective prompt engineering techniques that can be used to communicate with LLMs.

Check results and keep notes

Once you have written your prompt, look at the output given. Is it accurate, useful, and what you expected? If not, tweak the prompt and try again. It helps to keep notes on what works, especially if you are using GenAI platforms regularly or sharing tasks with others. Documenting your goal with output examples makes it easier to improve your prompts over time and achieve better results.
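Keeping notes can be as simple as a shared log. The sketch below assumes a hypothetical `PromptAttempt` record saved as JSON Lines; the field names and sample entries are illustrative, not a prescribed APS format.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date
from typing import List


@dataclass
class PromptAttempt:
    """One row in a hypothetical prompt log: what you asked, what came back, and what you learned."""
    goal: str
    prompt: str
    output_excerpt: str
    worked: bool
    note: str = ""
    logged_on: str = field(default_factory=lambda: date.today().isoformat())


def to_jsonl(attempts: List[PromptAttempt]) -> str:
    """Serialise attempts as JSON Lines (one JSON object per line) for saving or sharing."""
    return "\n".join(json.dumps(asdict(a)) for a in attempts)


def prompts_that_worked(attempts: List[PromptAttempt], goal: str) -> List[str]:
    """Return the prompts marked as successful for a given goal."""
    return [a.prompt for a in attempts if a.goal == goal and a.worked]


# Hypothetical entries showing a failed first attempt and an improved second one.
log = [
    PromptAttempt("brief summary", "Summarise the report.", "(long, unstructured overview)", False,
                  "Too long; no structure."),
    PromptAttempt("brief summary", "Summarise the key findings from the report in three bullet points.",
                  "(three short bullets)", True),
]
print(prompts_that_worked(log, "brief summary"))
```

JSON Lines is used here only because it is easy to append to and to share; a spreadsheet would serve the same purpose.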

Step 2: Craft effective instructions

Once you have identified your objective, the next step in the prompt engineering process is to craft effective instructions or queries to guide the LLM.

Understanding the LLM’s capabilities and limitations

Before crafting prompts, it is vital to understand what the LLM can and cannot do. These models are excellent at generating coherent text, summarising content, and answering questions based on patterns in data. However, they may hallucinate facts or misinterpret vague instructions. For example, asking ‘What are the latest policies?’ without specifying a domain may lead to generic or outdated responses. Knowing that the LLM lacks real-time awareness, you can instead frame a more specific prompt, such as ‘Summarise the latest workplace safety policies from 2024.’ This is an example of Zero-Shot Learning, where the LLM is expected to perform a task without prior examples, relying solely on the clarity and specificity of the instruction.
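A zero-shot prompt is just a clear, scoped instruction with no worked examples. The hypothetical helper below builds one. The optional `source_text` parameter is an added assumption reflecting the point that the LLM lacks real-time awareness: pasting in current material gives it something up to date to work from. No model is called.

```python
from typing import Optional


def zero_shot_prompt(action: str, topic: str, period: str, source_text: Optional[str] = None) -> str:
    """Build a zero-shot prompt: one clear, scoped instruction and no worked examples."""
    prompt = f"{action} {topic} from {period}."
    if source_text:
        # Assumption: because the model lacks real-time awareness, supplying the current
        # documents is safer than relying on what it saw during training.
        prompt += f" Base your answer only on the text below.\n\n{source_text}"
    return prompt


print(zero_shot_prompt("Summarise the latest", "workplace safety policies", "2024"))
# Summarise the latest workplace safety policies from 2024.
```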

Crafting clear and specific instructions

Clarity and specificity are key to effective prompt engineering. A well-structured prompt guides the LLM towards the desired output. For instance, instead of saying ‘Explain AI,’ you could say ‘Provide a brief overview of how artificial intelligence is used in public sector decision-making.’ This not only narrows the scope; it also sets expectations for tone and content. Similarly, ‘List three benefits of using generative AI in administrative workflows’ is more actionable than ‘Tell me about generative AI.’ These are still zero-shot prompts. Few-Shot Learning goes a step further by including a small number of worked examples or structured cues in the prompt for the LLM to follow. You might also use Negative Prompting to tell the LLM what to avoid, such as ‘Do not include technical jargon in the summary,’ helping refine the tone and accessibility of the output.
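To show the difference, the sketch below builds a genuine few-shot prompt: an instruction, a negative constraint, two worked examples, and a final input left for the LLM to complete. The function name, the ‘Input:/Output:’ layout, and the plain-English examples are hypothetical choices, not a required format.

```python
from typing import List, Optional, Tuple


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str,
                    avoid: Optional[List[str]] = None) -> str:
    """Instruction, optional negative constraints, worked examples, then the new input to complete."""
    parts = [instruction]
    if avoid:
        # Negative prompting: state explicitly what the output must not contain.
        parts.append("Do not " + "; do not ".join(avoid) + ".")
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    # The final block repeats the pattern but leaves the output for the LLM to fill in.
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


prompt = few_shot_prompt(
    "Rewrite each sentence in plain English.",
    [("Leverage cross-agency synergies.", "Work with other agencies."),
     ("Operationalise the framework.", "Put the framework into practice.")],
    "Socialise the draft with stakeholders.",
    avoid=["use technical jargon"],
)
print(prompt)
```

The worked examples carry most of the guidance: the LLM infers the pattern (jargon in, plain English out) from them rather than from a longer description.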

Step 3: Provide context and examples

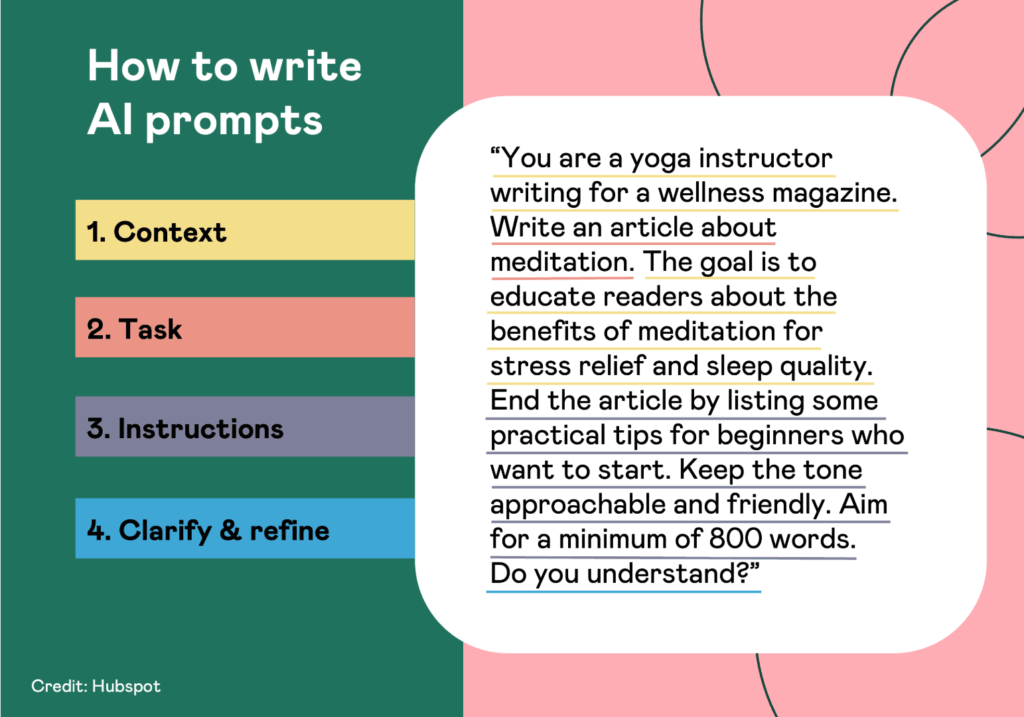

Building on step 2, an effective GenAI prompt begins with Context, which sets the scene for the LLM; in the example above, ‘You are a yoga instructor writing for a wellness magazine.’ This is a classic use of role prompting, giving the LLM a persona and purpose. The Task and Instructions sections further clarify the objective and tone, such as writing an article on meditation with a friendly tone and a specific word count. These elements embed both contextual cues and output formatting, helping the LLM generate more relevant and structured text.

The concluding section, Clarify & Refine, reflects the principle of Iterative Prompting. It tests the prompt by asking ‘Do you understand?’, a simple way to check whether the LLM has interpreted the instructions correctly. This mirrors the practice of refining prompts based on output quality, so that the LLM’s responses align with the intended goals. The diagram above shows how layered context, clear instructions, and iterative feedback loops contribute to more effective prompt engineering.
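The layered structure in the diagram can be assembled programmatically, which helps keep prompts consistent across a team. This is a minimal sketch: the section labels follow the diagram, while the function name and the 500-word instruction are illustrative assumptions.

```python
from typing import List


def layered_prompt(context: str, task: str, instructions: List[str],
                   check: str = "Do you understand?") -> str:
    """Assemble Context, Task, Instructions and a Clarify & Refine check into one prompt."""
    lines = [f"Context: {context}", f"Task: {task}", "Instructions:"]
    lines += [f"- {item}" for item in instructions]
    lines.append(f"Clarify & Refine: {check}")
    return "\n".join(lines)


prompt = layered_prompt(
    context="You are a yoga instructor writing for a wellness magazine.",
    task="Write an article on meditation.",
    instructions=["Use a friendly tone.", "Keep it to about 500 words."],  # word count is illustrative
)
print(prompt)
```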

Step 4: Iterate and fine-tune

Refining prompts iteratively

This step in prompt engineering is all about testing and fine-tuning your prompts to get the best results. Start by monitoring the LLM’s responses to check whether the generated text matches what you expected. If the output is too vague, too long, or off-topic, that is your cue to make changes. Make gradual adjustments to the wording, structure, or examples you have used; small edits can make a substantial difference. Keep going with systematic iterations, where you test, review, and revise your prompt in a loop to find what works best. It is also worth trying alternative prompt formulations, like rephrasing the question or changing the format, to see whether that improves the response. Finally, always check for consistency: because LLM outputs can vary between runs, a good prompt should give you reliable results every time, not just once.

Prompt engineering is an iterative process. After testing a prompt, review the output and adjust accordingly.

If the LLM gives a lengthy or off-topic response to ‘Summarise the report,’ you might refine it to ‘Summarise the key findings from the report in three bullet points.’ You can also experiment with format cues like ‘Write a formal email…’ or ‘Generate a policy brief…’ to shape the generated text. This process exemplifies Iterative Prompting, where prompts are continuously refined based on the LLM’s output.
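Part of the review in each iteration can be automated for simple format requirements. The sketch below assumes you already have the LLM’s output as a string (no model is called); the function names, the three-bullet target, and the word limit are hypothetical. It checks the output against the intended shape and appends any missing constraints to the prompt for the next attempt.

```python
import re
from typing import List


def count_bullets(output: str) -> int:
    """Count lines that start with a common bullet marker (-, * or •)."""
    return sum(1 for line in output.splitlines() if re.match(r"\s*[-*•]\s+", line))


def review(output: str, bullets: int = 3, max_words: int = 120) -> List[str]:
    """Compare an output with the intended shape; return instructions that would fix any gaps."""
    issues = []
    if count_bullets(output) != bullets:
        issues.append(f"Use exactly {bullets} bullet points.")
    if len(output.split()) > max_words:
        issues.append(f"Keep the response under {max_words} words.")
    return issues


def refine(prompt: str, issues: List[str]) -> str:
    """Append the fixes to the prompt for the next iteration."""
    return " ".join([prompt] + issues)


first_output = "The report discusses funding, staffing, risks and timelines in one long paragraph."
prompt = refine("Summarise the key findings from the report.", review(first_output))
print(prompt)
# Summarise the key findings from the report. Use exactly 3 bullet points.
```

Checks like these only catch formatting problems; accuracy and relevance still need a human reviewer.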

For more sophisticated tasks, Chain-of-Thought Prompting can be used to encourage step-by-step reasoning, for example: ‘List the steps involved in drafting a policy brief, then summarise each.’ In some scenarios, combining methods works well, such as integrating a few-shot example with a chain-of-thought instruction. This is known as Hybrid Prompting.
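The two techniques can be sketched as prompt builders. Under the assumptions here (a hypothetical function name and wording), a plain chain-of-thought prompt asks for the steps before the summaries, and supplying one worked example turns it into a hybrid prompt.

```python
from typing import Optional, Tuple


def cot_prompt(task: str, example: Optional[Tuple[str, str]] = None) -> str:
    """Chain-of-thought prompt; passing a worked example makes it a hybrid (few-shot + CoT) prompt."""
    parts = []
    if example:
        question, worked_answer = example
        parts.append(f"Example task: {question}\nExample answer:\n{worked_answer}")
    parts.append(
        f"Task: {task}\n"
        "Work through this step by step: list the steps first, then summarise each step."
    )
    return "\n\n".join(parts)


plain = cot_prompt("Explain how to draft a policy brief.")
hybrid = cot_prompt(
    "Explain how to draft a ministerial submission.",
    example=(
        "Explain how to draft a policy brief.",
        "1. Define the problem: state the issue in one sentence.\n"
        "2. Outline the options: list two or three feasible responses.",
    ),
)
print(hybrid)
```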

Step 5: Evaluate and measure success

Measuring prompt effectiveness

Once your prompt engineering solution is in place, it is important to assess how well it performs. Start by defining relevant metrics based on your goals. These could include accuracy, relevance, coherence, creativity, or response length. Assess output alignment with project goals by checking whether the LLM responses meet the intended purpose. This helps you understand if the prompt is guiding the LLM effectively. It is also useful to test against diverse inputs to see how well the prompt holds up across different scenarios. A prompt that performs consistently across varied content is more robust and reliable.
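Some of these metrics can be approximated automatically. The sketch below uses two crude, hypothetical proxies, keyword coverage for relevance and a word limit for length, averaged over a set of varied test cases. Accuracy, coherence, and creativity still need human judgement or a proper rubric.

```python
from statistics import mean
from typing import Dict, List, Tuple


def keyword_coverage(output: str, expected_terms: List[str]) -> float:
    """Crude relevance proxy: share of expected terms that appear in the output."""
    if not expected_terms:
        return 1.0
    text = output.lower()
    return sum(term.lower() in text for term in expected_terms) / len(expected_terms)


def evaluate(cases: List[Tuple[str, List[str]]], max_words: int = 100) -> Dict[str, float]:
    """Score each (output, expected_terms) case and report averages across the set."""
    coverage = [keyword_coverage(output, terms) for output, terms in cases]
    length_ok = [len(output.split()) <= max_words for output, _ in cases]
    return {
        "mean_coverage": mean(coverage),
        "length_pass_rate": sum(length_ok) / len(cases),
    }


# Hypothetical outputs paired with the terms a relevant answer should mention.
cases = [
    ("Funding rises in 2025 while staffing falls.", ["funding", "staffing"]),
    ("Funding rises in 2025.", ["funding", "risk"]),
]
print(evaluate(cases))  # {'mean_coverage': 0.75, 'length_pass_rate': 1.0}
```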

Comparing and gathering feedback

To evaluate success, compare LLM performance before and after prompt engineering. Collect feedback from stakeholders to assess how well the LLM meets expectations and to identify areas for improvement. Check response consistency to ensure reliable results over time. Continuous evaluation helps maintain high-quality LLM outputs.
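A before-and-after comparison can be summarised with two numbers: the change in average score and the spread of scores across repeated runs. This sketch assumes each run has already been scored (for example, with a rubric or reviewer ratings); the function name and the scores shown are hypothetical.

```python
from statistics import mean, pstdev
from typing import Dict, List


def compare_runs(before: List[float], after: List[float]) -> Dict[str, float]:
    """Summarise scores from repeated runs of the old and new prompt on the same inputs."""
    return {
        "improvement": mean(after) - mean(before),
        # Population standard deviation: lower values mean more consistent results.
        "spread_before": pstdev(before),
        "spread_after": pstdev(after),
    }


# Hypothetical scores between 0 and 1 over five runs of each prompt.
before = [0.4, 0.7, 0.5, 0.3, 0.6]
after = [0.8, 0.8, 0.9, 0.8, 0.7]
print(compare_runs(before, after))
```

A higher average with a smaller spread suggests the refined prompt is both better and more consistent; a higher average with a larger spread is a cue to keep iterating.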

Six key considerations for APS workflows

The APS can adopt prompt engineering on GenAI platforms effectively by following the step-by-step approach above. The way APS personnel design prompts can affect the quality, reliability, and ethical soundness of LLM outputs. There are six primary areas to address:

Prompting as a core skill in GenAI-augmented policy, business and technical craft

GenAI platforms are currently utilised for tasks such as document summarisation, policy brief generation, and the exploration of policy options. These activities require carefully constructed prompts to achieve outputs that are relevant, accurate, and appropriately detailed. Prompt engineering has become an important skill to direct LLMs in addressing the complexities of public policy, business, and technical matters within the APS workplace.

Need for critical AI literacy

Prompt engineering requires more than knowing the right syntax. It requires an understanding of how LLMs interpret input and generate output. The DTA Guidance reinforces the need for APS personnel to develop critical AI literacy, including how to prompt for transparency, cite sources, and mitigate issues with prompting.

Human oversight and prompt framing

The DTA’s principle of “human-centred design” aligns with the need for human-in-the-loop oversight. APS personnel must frame prompts that elicit verifiable, auditable responses and support accountability. This ensures LLM outputs are not only useful but also defensible in APS contexts.

Training and workforce development

The UNSW study and DTA guidance both call for workforce development, which should include prompt engineering as a core capability. Whether extracting insights from large datasets, simulating stakeholder perspectives, or drafting policy documents, prompt engineering enables APS personnel to use GenAI platforms responsibly and effectively.

Tool-specific prompting

As government departments and agencies trial GenAI platforms, APS personnel must adapt their techniques to each platform’s strengths and limitations. This aligns with the DTA’s emphasis on understanding the capabilities and constraints of the GenAI platform before deployment in operational settings.

Ethical and regulatory considerations

Prompt engineering must be aligned with APS values, including data sovereignty, privacy, and fairness. The DTA guidance stresses the importance of designing prompts that avoid reinforcing historical biases or requesting sensitive data. Ethical prompt design is essential for maintaining public trust and ensuring compliance with regulatory frameworks.

Final thoughts

As GenAI platforms become part of the APS toolkit, prompt engineering emerges as a vital skill, not just for technical people but for anyone engaging with AI. It is not about replacing people; it is about empowering them to work smarter, faster, and more ethically.

The APS is learning to talk to machines, and with the right prompts those machines are starting to talk back clearly, responsibly, and in service of the public good.

References

- Hart, J., Dickinson, H., Henne, K., McDermott, V., Rahman, S. & Connor, J. (2025). The future of Generative AI in policy work. Canberra: Public Service Research Group, UNSW Canberra.

- Digital Transformation Agency (DTA) & Department of Industry, Science and Resources (DISR). (2023). Interim guidance on government use of public generative AI tools. Australian Government Architecture. Available at: https://architecture.digital.gov.au/guidance-generative-ai [Accessed 4 Jul. 2025].

- Aryani, A. (2023). 8 Types of Prompt Engineering. Medium. Available at: https://medium.com/@amiraryani/very-useful-and-well-described-article-d6e1ef477802 [Accessed 6 Jul. 2025].

- Bot Penguin. (2024). How Prompt Engineering AI Works: A Step-by-Step Guide. Bot Penguin Blog. Available at: https://botpenguin.com/blogs/how-prompt-engineering-ai-works-a-step-by-step-guide [Accessed 6 Jul. 2025].